NPU Prebuilt Demo Usage

Prebuilt example demos for interacting with the Amlogic NPU using OpenCV4

Install OpenCV4

Update your system and install the OpenCV packages.

$ sudo apt update
$ sudo apt install libopencv-dev python3-opencv
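To confirm that the Python bindings from python3-opencv are usable, a quick check along these lines may help. It is a minimal sketch and not part of the Khadas demo: the helper name is_opencv4 is hypothetical, and it assumes only that cv2.__version__ is a dotted version string.

```python
def is_opencv4(version: str) -> bool:
    """Return True if a dotted version string such as '4.5.1' has major version 4."""
    major = version.split(".")[0]
    return major.isdigit() and int(major) == 4


if __name__ == "__main__":
    try:
        import cv2  # provided by the python3-opencv package
    except ImportError:
        print("cv2 not found; check that python3-opencv is installed")
    else:
        status = "OK" if is_opencv4(cv2.__version__) else "not a 4.x release"
        print("OpenCV", cv2.__version__, status)
```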

Get NPU Demo

Get the demo source: khadas/aml_npu_demo_binaries

$ git clone --recursive https://github.com/khadas/aml_npu_demo_binaries

The NPU demo contains three examples:

- detect_demo - A collection of yolo series models for real-time camera recognition.
- detect_demo_picture - A collection of yolo series models that identify pictures.
- inceptionv3 - An inception model that identifies pictures.

Inception Model

The inception model does not have any library dependencies and can be used as is.

Enter the inceptionv3 directory.

$ cd aml_npu_demo_binaries/inceptionv3
$ ls
dog_299x299.jpg goldfish_299x299.jpg imagenet_slim_labels.txt VIM3 VIM3L

imagenet_slim_labels.txt is the label file. After a run, look up each result index in this file to find the corresponding label.

Enter the VIM3 or VIM3L directory, depending on your board. The example below uses VIM3.

$ ls aml_npu_demo_binaries/inceptionv3/VIM3
inceptionv3 inception_v3.nb run.sh
$ cd aml_npu_demo_binaries/inceptionv3/VIM3
$ ./run.sh
Create Neural Network: 59ms or 59022us
Verify...
Verify Graph: 0ms or 739us
Start run graph [1] times...
Run the 1 time: 20.00ms or 20497.00us
vxProcessGraph execution time:
Total 20.00ms or 20540.00us
Average 20.54ms or 20540.00us
--- Top5 ---
2: 0.833984
795: 0.009102
974: 0.003592
408: 0.002207
393: 0.002111

Looking up the top result, index 2 with a score of 0.833984, in imagenet_slim_labels.txt gives goldfish, which is the correct identification. You can identify other images the same way.
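The lookup can also be scripted. The sketch below parses the Top5 section of the run.sh output and maps an index to a label. The function names are hypothetical, and it assumes that the class index is the zero-based line number in imagenet_slim_labels.txt; check that against your copy of the file.

```python
import re
from typing import List, Tuple


def parse_top5(output: str) -> List[Tuple[int, float]]:
    """Extract (class_index, score) pairs that follow the '--- Top5 ---' marker."""
    _, _, tail = output.partition("--- Top5 ---")
    pairs = re.findall(r"(\d+):\s*([0-9.]+)", tail)
    return [(int(index), float(score)) for index, score in pairs]


def label_for(index: int, labels: List[str]) -> str:
    """Assumption: the class index is the zero-based line number in the label file."""
    return labels[index]


if __name__ == "__main__":
    sample = ("--- Top5 --- 2: 0.833984 795: 0.009102 "
              "974: 0.003592 408: 0.002207 393: 0.002111")
    best_index, best_score = parse_top5(sample)[0]
    print(best_index, best_score)
    # With the label file at hand:
    # labels = open("imagenet_slim_labels.txt").read().splitlines()
    # print(label_for(best_index, labels))
```

Text before the marker, such as the timing lines, is ignored, so the full output of run.sh can be passed in unchanged.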

Yolo Series Model

Install and uninstall libraries

The yolo series models require their libraries to be installed into the system. Both the detect_demo and detect_demo_picture examples need this step.

Follow the steps below to install or uninstall the libraries.

Install libraries:

$ cd aml_npu_demo_binaries/detect_demo_picture
$ sudo ./INSTALL

Uninstall libraries:

$ cd aml_npu_demo_binaries/detect_demo_picture
$ sudo ./UNINSTALL

Type Parameter Description

The type parameter is a required input for both camera recognition and picture recognition. It specifies which YOLO series model to run.

- 0 : yoloface model
- 1 : yolov2 model
- 2 : yolov3 model
- 3 : yolov3_tiny model
- 4 : yolov4 model
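In a wrapper script it can be convenient to refer to models by name rather than by number. The following sketch encodes the values listed above; the names YOLO_MODELS and type_for_model are hypothetical and not part of the demo.

```python
# Type parameter values as listed in this section.
YOLO_MODELS = {
    0: "yoloface",
    1: "yolov2",
    2: "yolov3",
    3: "yolov3_tiny",
    4: "yolov4",
}


def type_for_model(name: str) -> int:
    """Return the -m value for a model name, for example 'yolov3' gives 2."""
    for value, model in YOLO_MODELS.items():
        if model == name:
            return value
    known = ", ".join(YOLO_MODELS.values())
    raise ValueError(f"unknown model {name!r}; expected one of: {known}")
```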

Operating Environment for NPU demo

The NPU demo can run in either X11 desktop mode or framebuffer mode. Select the demo binary that matches your environment.

- Demos with fb in the name run in framebuffer mode.

- Demos with x11 in the name run in X11 mode.
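One way to choose between the two variants in a script is to check whether an X11 display is configured. This is only a heuristic sketch: the function name pick_backend is hypothetical, and it assumes that a non-empty DISPLAY environment variable means an X11 session is available.

```python
import os
from typing import Mapping, Optional


def pick_backend(env: Optional[Mapping[str, str]] = None) -> str:
    """Return 'x11' if DISPLAY is set and non-empty, otherwise 'fb'.

    Assumption: a non-empty DISPLAY variable indicates a usable X11 session.
    """
    env = os.environ if env is None else env
    return "x11" if env.get("DISPLAY") else "fb"


if __name__ == "__main__":
    print("suggested demo variant:", pick_backend())
```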

Demo examples

detect_demo_picture

$ cd aml_npu_demo_binaries/detect_demo_picture
$ ls
1080p.bmp detect_demo_x11 detect_demo_fb INSTALL lib nn_data README.md UNINSTALL

Run

Command format for picture recognition:

$ cd aml_npu_demo_binaries/detect_demo_picture
$ ./detect_demo_xx -m <type parameter> -p <picture_path>

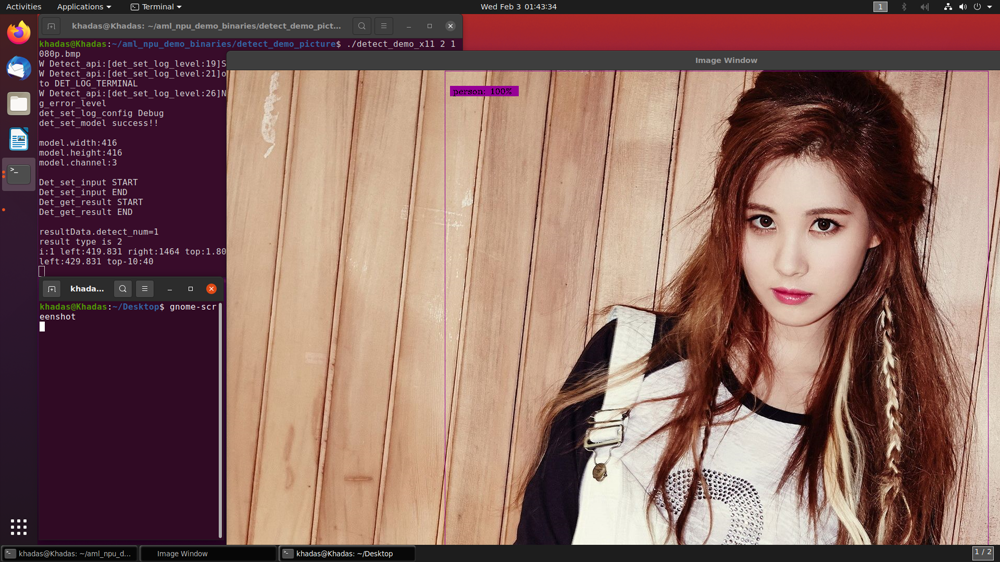

Here is an example of using OpenCV4 to call the yolov3 model to recognize pictures under x11.

$ cd aml_npu_demo_binaries/detect_demo_picture
$ ./detect_demo_x11 -m 2 -p 1080p.bmp
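The command format can be assembled programmatically, which makes it easier to validate the arguments before launching the demo. The sketch below uses a hypothetical helper, picture_command, and relies only on the binary names and flags shown in this section.

```python
from typing import List


def picture_command(backend: str, model_type: int, picture: str) -> List[str]:
    """Build the argument list for ./detect_demo_<backend> -m <type> -p <picture>."""
    if backend not in ("x11", "fb"):
        raise ValueError("backend must be 'x11' or 'fb'")
    if model_type not in range(5):  # type parameters run from 0 to 4
        raise ValueError("model_type must be between 0 and 4")
    return [f"./detect_demo_{backend}", "-m", str(model_type), "-p", picture]


if __name__ == "__main__":
    # Reproduces the yolov3 example under x11.
    print(" ".join(picture_command("x11", 2, "1080p.bmp")))
```

The returned list can be passed to subprocess.run from within the detect_demo_picture directory on the board.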

The results of the operation are as follows.

detect_demo

Use the demo with usb in its name for a USB camera, and the demo with mipi in its name for a MIPI camera.

Run

Command format for camera dynamic recognition.

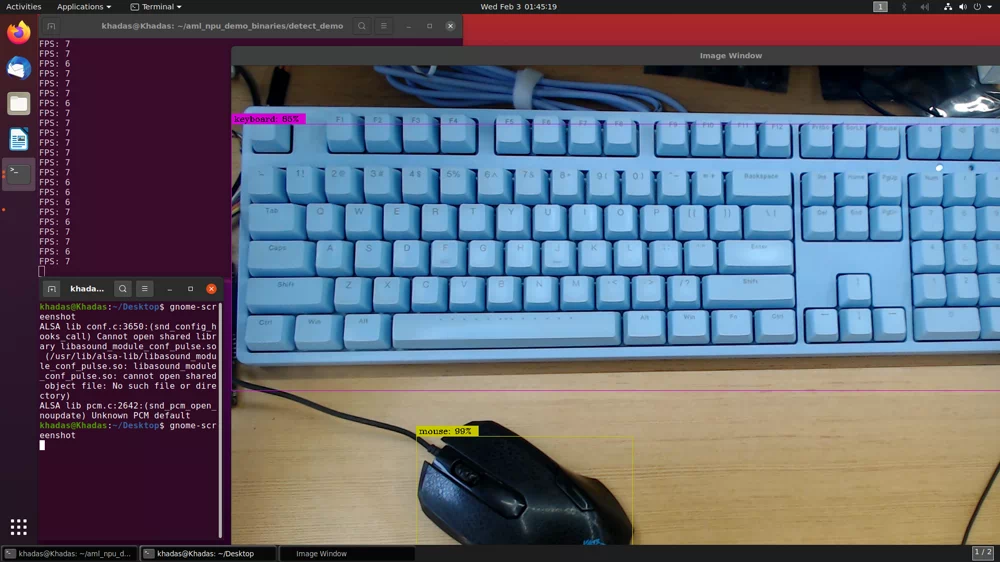

$ cd aml_npu_demo_binaries/detect_demo
$ ./detect_xx_xx -d <video node> -m <type parameter>

Here is an example of using OpenCV4 to call yolov3 in the x11 environment using a USB camera.

$ cd aml_npu_demo_binaries/detect_demo
$ ./detect_demo_x11_usb -d /dev/video1 -m 2
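The video node varies between setups, so it can help to list the available nodes before building the command. The sketch below uses hypothetical helper names. It assumes that cameras appear as /dev/video* nodes and that the binaries follow the detect_demo_<x11|fb>_<usb|mipi> naming seen in detect_demo_x11_usb; check the contents of the detect_demo directory to confirm the actual names.

```python
import glob
from typing import List


def video_nodes(pattern: str = "/dev/video*") -> List[str]:
    """List device nodes matching the pattern, sorted by name."""
    return sorted(glob.glob(pattern))


def camera_command(backend: str, camera: str, node: str, model_type: int) -> List[str]:
    """Build ./detect_demo_<backend>_<camera> -d <node> -m <type>.

    Assumption: binary names follow the pattern of detect_demo_x11_usb.
    """
    if backend not in ("x11", "fb") or camera not in ("usb", "mipi"):
        raise ValueError("expected backend in {x11, fb} and camera in {usb, mipi}")
    return [f"./detect_demo_{backend}_{camera}", "-d", node, "-m", str(model_type)]


if __name__ == "__main__":
    print("video nodes:", video_nodes())
    # Reproduces the USB camera example with yolov3 under x11.
    print(" ".join(camera_command("x11", "usb", "/dev/video1", 2)))
```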

After turning on the camera, the recognition result will be displayed on the screen.