YOLOv8n OpenCV Edge2 Demo - 2

Introduction

YOLOv8n is an object detection model. It predicts a bounding box around each object it detects in an image.

Inference results on Edge2.

Inference speed test: the USB camera takes about 52 ms per frame, and the MIPI camera takes about 40 ms per frame.
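To relate these per-frame times to a frame rate, the short sketch below converts milliseconds per frame to frames per second. The helper name ms_to_fps is illustrative only, and the figures are the approximate ones quoted above.

```python
def ms_to_fps(ms_per_frame: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    if ms_per_frame <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / ms_per_frame


if __name__ == "__main__":
    # Approximate per-frame times quoted in this document.
    for name, ms in (("USB camera", 52.0), ("MIPI camera", 40.0)):
        print(f"{name}: about {ms_to_fps(ms):.1f} FPS")
```

By this arithmetic, 40 ms per frame corresponds to 25 FPS and 52 ms per frame to roughly 19 FPS.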

Train Model

Download the official YOLOv8 code from ultralytics/ultralytics.

$ git clone https://github.com/ultralytics/ultralytics.git

Refer to README.md to train a YOLOv8n model. This guide was tested with torch==1.10.1 and ultralytics==8.0.86.
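If you want to compare your training environment with these versions, a sketch along the following lines may help. It relies on importlib.metadata, so it assumes Python 3.8 or newer; TESTED_VERSIONS and the helper names are illustrative and not part of any package.

```python
from importlib import metadata

# Versions quoted in this guide (other versions may work, but are untested here).
TESTED_VERSIONS = {"torch": "1.10.1", "ultralytics": "8.0.86"}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(tested=TESTED_VERSIONS):
    """Build one status line per package comparing installed and tested versions."""
    lines = []
    for package, wanted in tested.items():
        found = installed_version(package)
        if found is None:
            status = "not installed"
        elif found == wanted:
            status = "matches"
        else:
            status = f"differs (installed {found})"
        lines.append(f"{package}=={wanted}: {status}")
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```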

Convert Model

Build virtual environment

The SDK supports only Python 3.6 and Python 3.8. The following example creates a virtual environment for Python 3.8.

Install the Python packages.

$ sudo apt update
$ sudo apt install python3-dev python3-numpy

Follow the conda documentation to install conda.

Then create a virtual environment.

$ conda create -n npu-env python=3.8
$ conda activate npu-env    # activate
$ conda deactivate          # deactivate

Get convert tool

Download the conversion tool from rockchip-linux/rknn-toolkit2 and check out the commit used in this guide.

$ git clone https://github.com/rockchip-linux/rknn-toolkit2.git
$ cd rknn-toolkit2
$ git checkout 9ad79343fae625f4910242e370035fcbc40cc31a

From the rknn-toolkit2 directory, install the dependencies and the RKNN-Toolkit2 package.

$ sudo apt-get install python3 python3-dev python3-pip
$ sudo apt-get install libxslt1-dev zlib1g-dev libglib2.0 libsm6 libgl1-mesa-glx libprotobuf-dev gcc cmake
$ pip3 install -r doc/requirements_cp38-*.txt
$ pip3 install packages/rknn_toolkit2-*-cp38-cp38-linux_x86_64.whl

Convert

After training the model, modify ultralytics/ultralytics/nn/modules/head.py as follows.

- head.py

diff --git a/ultralytics/nn/modules/head.py b/ultralytics/nn/modules/head.py
index 0b02eb3..0a6e43a 100644
--- a/ultralytics/nn/modules/head.py
+++ b/ultralytics/nn/modules/head.py
@@ -42,6 +42,9 @@ class Detect(nn.Module):
     def forward(self, x):
         """Concatenates and returns predicted bounding boxes and class probabilities."""
+        if torch.onnx.is_in_onnx_export():
+            return self.forward_export(x)
+
         shape = x[0].shape  # BCHW
         for i in range(self.nl):
             x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
@@ -80,6 +83,15 @@ class Detect(nn.Module):
             a[-1].bias.data[:] = 1.0  # box
             b[-1].bias.data[:m.nc] = math.log(5 / m.nc / (640 / s) ** 2)  # cls (.01 objects, 80 classes, 640 img)
+    def forward_export(self, x):
+        results = []
+        for i in range(self.nl):
+            dfl = self.cv2[i](x[i]).contiguous()
+            cls = self.cv3[i](x[i]).contiguous()
+            results.append(torch.cat([cls, dfl], 1).permute(0, 2, 3, 1).unsqueeze(1))
+            # results.append(torch.cat([cls, dfl], 1))
+        return tuple(results)
+

If you installed ultralytics with pip, apply the same change to head.py inside the installed package.

Create a Python file with the following content to export the ONNX model.

- export.py

from ultralytics import YOLO

model = YOLO("./runs/detect/train/weights/best.pt")
results = model.export(format="onnx")

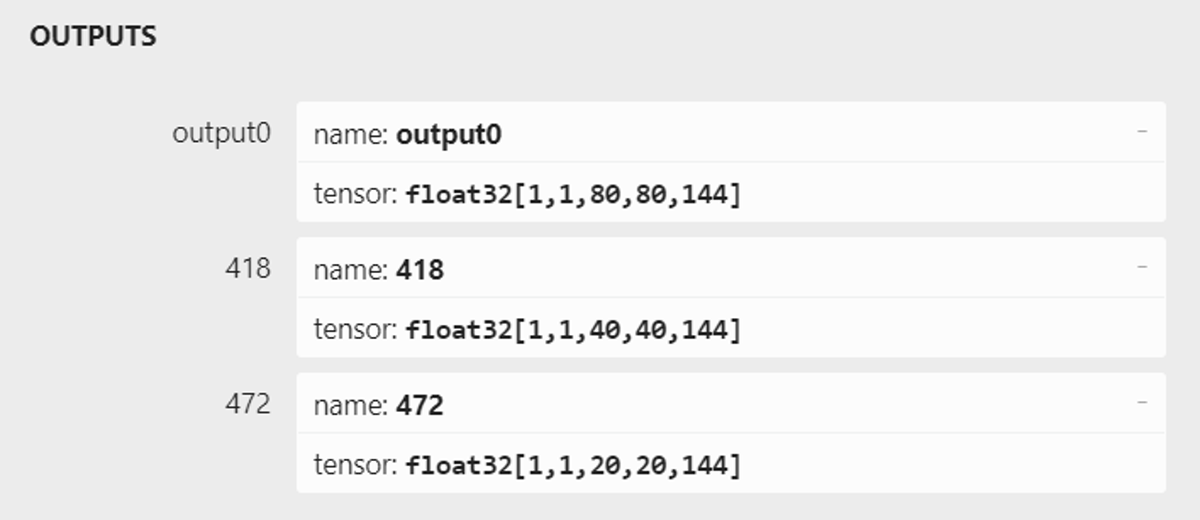

Use Netron to check that your model's outputs look like the figure above. If they do not, check your changes to head.py.
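As a rough guide to what Netron should show, the sketch below computes the output shapes that forward_export would produce, following its cat, permute(0, 2, 3, 1) and unsqueeze(1) steps. It assumes a 640x640 input, batch size 1, 80 classes, reg_max of 16 and strides of 8, 16 and 32 (the usual YOLOv8n defaults). The function name is illustrative and not part of ultralytics.

```python
def expected_export_shapes(img_size=640, num_classes=80, reg_max=16,
                           strides=(8, 16, 32), batch=1):
    """Return the expected shape of each output tensor of forward_export.

    Each head concatenates class scores (num_classes channels) with the DFL
    box distribution (4 * reg_max channels), then moves channels last and
    inserts a singleton axis: (B, C, H, W) -> (B, H, W, C) -> (B, 1, H, W, C).
    """
    channels = num_classes + 4 * reg_max
    shapes = []
    for stride in strides:
        grid = img_size // stride  # feature-map height and width at this stride
        shapes.append((batch, 1, grid, grid, channels))
    return shapes


if __name__ == "__main__":
    for shape in expected_export_shapes():
        print(shape)
```

Under these assumptions the three outputs are (1, 1, 80, 80, 144), (1, 1, 40, 40, 144) and (1, 1, 20, 20, 144). A model trained with a different number of classes changes only the last dimension.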

Enter rknn-toolkit2/examples/onnx/yolov5, copy the exported ONNX model there as yolov8n.onnx, and modify test.py as follows.

- test.py

# Create RKNN object
rknn = RKNN(verbose=True)

# pre-process config
print('--> Config model')
rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform='rk3588')
print('done')

# Load ONNX model
print('--> Loading model')
ret = rknn.load_onnx(model='./yolov8n.onnx')
if ret != 0:
    print('Load model failed!')
    exit(ret)
print('done')

# Build model
print('--> Building model')
ret = rknn.build(do_quantization=True, dataset='./dataset.txt')
if ret != 0:
    print('Build model failed!')
    exit(ret)
print('done')

# Export RKNN model
print('--> Export rknn model')
ret = rknn.export_rknn('./yolov8n.rknn')
if ret != 0:
    print('Export rknn model failed!')
    exit(ret)
print('done')
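The mean_values and std_values passed to rknn.config describe per-channel input normalization. As a small illustration, assuming the usual (value - mean) / std form, the sketch below shows that a mean of 0 and a std of 255 map 8-bit pixel values into the range 0 to 1. The helper normalize_pixel is hypothetical and is not part of the RKNN API.

```python
def normalize_pixel(pixel, mean_values=(0, 0, 0), std_values=(255, 255, 255)):
    """Apply (value - mean) / std to each channel of a single pixel."""
    return tuple((v - m) / s for v, m, s in zip(pixel, mean_values, std_values))


if __name__ == "__main__":
    # Darkest, mid-range and brightest 8-bit values in the three channels.
    print(normalize_pixel((0, 128, 255)))
```

With these settings the model receives inputs on the same 0 to 1 scale that YOLOv8 uses during training.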

Run test.py to generate the RKNN model.

$ python3 test.py
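Because the build step is called with do_quantization=True and dataset='./dataset.txt', that file must exist before test.py runs. The sketch below is one way to generate it, assuming the file lists one calibration image path per line, as the dataset.txt in the toolkit examples does. The helper name, the suffix list and the directory you pass in are all illustrative.

```python
from pathlib import Path

# Image types to include; adjust to match your calibration images.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_list(image_dir, output_file="dataset.txt", limit=None):
    """Write one absolute image path per line and return how many were written."""
    image_dir = Path(image_dir)
    paths = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    if limit is not None:
        paths = paths[:limit]  # keep only the first `limit` images
    Path(output_file).write_text("".join(f"{p.resolve()}\n" for p in paths))
    return len(paths)
```

For example, write_dataset_list("./calib_images", "dataset.txt", limit=100) would list up to 100 images from a hypothetical calib_images directory. Images resembling your deployment scenes are generally a better choice for quantization than arbitrary ones.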

Run NPU

Get source code

Clone the source code from the khadas/edge2-npu repository.

$ git clone https://github.com/khadas/edge2-npu

Install dependencies

$ sudo apt update
$ sudo apt install cmake libopencv-dev

Compile and run

Picture input demo

Put yolov8n.rknn in edge2-npu/C++/yolov8n/data/model.

# Compile
$ bash build.sh

# Run
$ cd install/yolov8n
$ ./yolov8n data/model/yolov8n.rknn data/img/bus.jpg

Camera input demo

Put yolov8n.rknn in edge2-npu/C++/yolov8n_cap/data/model.

# Compile
$ bash build.sh

# Run USB camera
$ cd install/yolov8n_cap
$ ./yolov8n_cap data/model/yolov8n.rknn usb 60

# Run MIPI camera
$ cd install/yolov8n_cap
$ ./yolov8n_cap data/model/yolov8n.rknn mipi 42

60 and 42 are the camera device indexes.
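To see which indexes exist on your board, one option is to list the video device nodes. This is a minimal sketch that assumes the index passed to the demo corresponds to the number in /dev/videoN, which this guide does not confirm. list_video_indexes is a hypothetical helper.

```python
import glob
import os
import re


def list_video_indexes(pattern="/dev/video*"):
    """Return the sorted numeric suffixes of device nodes matching the pattern."""
    indexes = []
    for path in glob.glob(pattern):
        # Accept only names of the form video<digits>, e.g. video60.
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:
            indexes.append(int(match.group(1)))
    return sorted(indexes)


if __name__ == "__main__":
    print(list_video_indexes())
```

A single camera may expose several nodes, so you may need to try more than one of the listed indexes.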

Camera input multithreading demo

Put yolov8n.rknn in edge2-npu/C++/yolov8n_cap_multithreading/data/model.

# Compile
$ bash build.sh

# Run USB camera
$ cd install/yolov8n_cap
$ ./yolov8n_cap data/model/yolov8n.rknn usb 60 2

# Run MIPI camera
$ cd install/yolov8n_cap
$ ./yolov8n_cap data/model/yolov8n.rknn mipi 42 2

The last argument, 2, is the number of threads.

If your YOLOv8n model's classes differ from the COCO classes, update data/coco_80_labels_list.txt and OBJ_CLASS_NUM in include/postprocess.h to match.
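Since the label file and OBJ_CLASS_NUM have to agree, a quick consistency check can catch mismatches before you rebuild. The sketch below assumes one label per line in the label file and that OBJ_CLASS_NUM is defined with a plain "#define OBJ_CLASS_NUM <number>" line, which is an assumption about postprocess.h. All helper names are illustrative.

```python
import re
from pathlib import Path


def count_labels(labels_file):
    """Count non-empty lines, assuming one class label per line."""
    text = Path(labels_file).read_text()
    return sum(1 for line in text.splitlines() if line.strip())


def read_obj_class_num(header_file):
    """Return the integer from '#define OBJ_CLASS_NUM <n>', or None if absent."""
    text = Path(header_file).read_text()
    match = re.search(r"^\s*#define\s+OBJ_CLASS_NUM\s+(\d+)", text, flags=re.MULTILINE)
    return int(match.group(1)) if match else None


def classes_consistent(labels_file, header_file):
    """True when the number of labels equals OBJ_CLASS_NUM."""
    return count_labels(labels_file) == read_obj_class_num(header_file)
```

For example, classes_consistent("data/coco_80_labels_list.txt", "include/postprocess.h") should return True once both files reflect your model's class count.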